Changing the way machines understand the world around them.

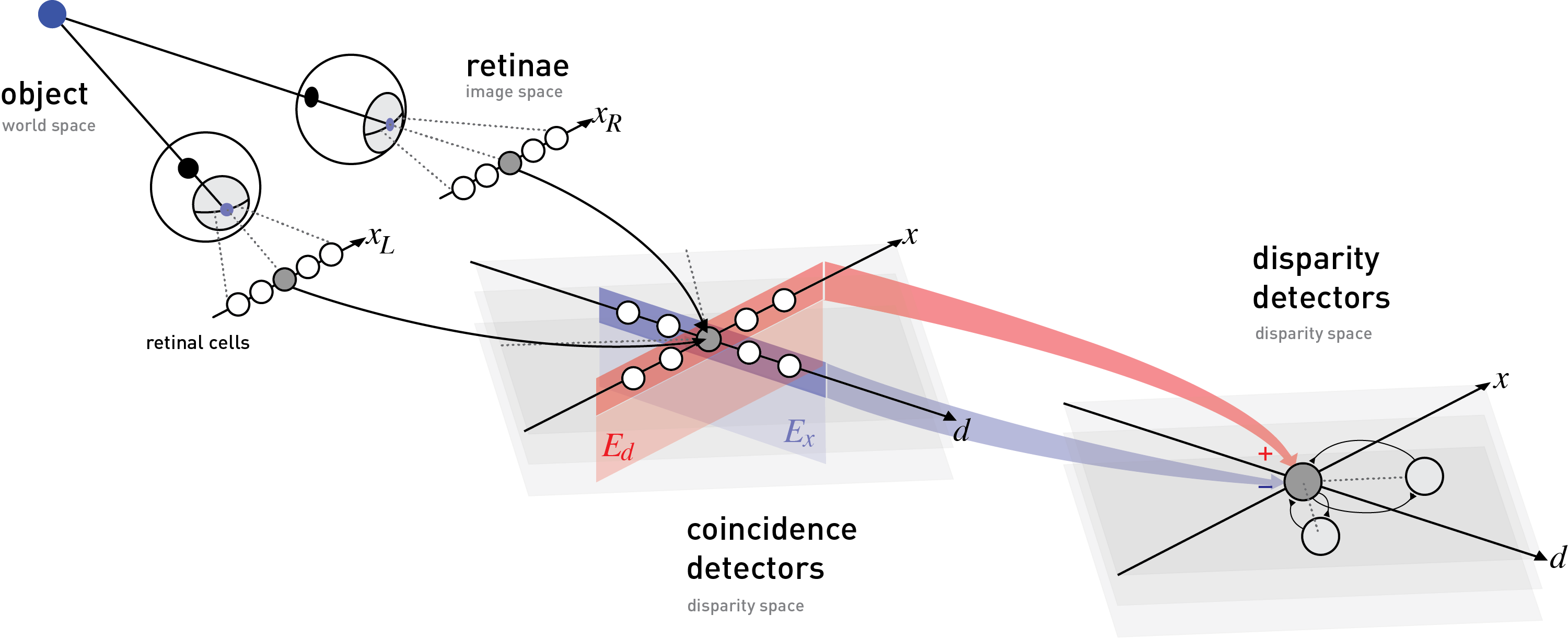

The human visual system provides an incredible, general-purpose solution to sensing and perception. But that does not mean it cannot be improved upon.

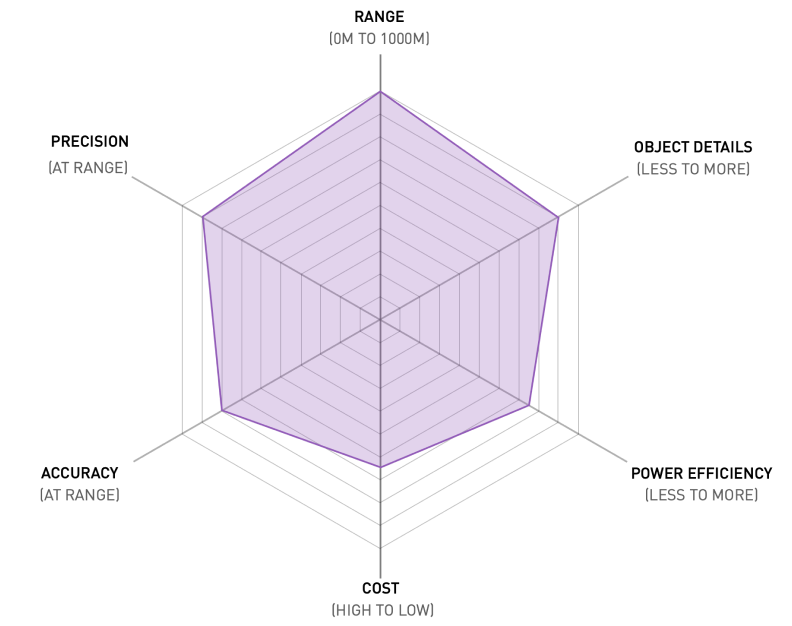

Humans have two eyes on a single plane, separated by a relatively small distance. Unlike humans, a camera array is not limited to two apertures, a specific focal length or resolution, or even a single horizontal baseline. By using more cameras, with wider and multiple baselines, a multi-view array can provide better scene depth than the unaided human eye. Cameras can also be easily modified for a given scenario, so depth accuracy and precision can be tuned to a specific use case or application.
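To make the baseline argument concrete, here is a minimal sketch based on the standard rectified-stereo relation Z = f·B/d. To first order, depth uncertainty grows with the square of range and shrinks in proportion to the baseline. The function name and every number below (focal length in pixels, quarter-pixel matching error, baselines, 100 m range) are illustrative assumptions, not specifications of any particular product.

```python
def depth_error_m(depth_m, baseline_m, focal_px, disparity_error_px=0.25):
    """First-order depth uncertainty for a rectified stereo pair.

    From Z = f * B / d it follows that |dZ| ~= Z**2 / (f * B) * |dd|,
    where dd is the disparity (matching) error in pixels.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Illustrative values only: 0.065 m roughly matches human eye spacing,
# the other baselines stand in for a wider camera array (assumed).
for baseline_m in (0.065, 0.5, 1.0):
    err = depth_error_m(depth_m=100.0, baseline_m=baseline_m, focal_px=2000.0)
    print(f"baseline {baseline_m:5.3f} m: ~{err:.2f} m depth uncertainty at 100 m")
```

Under these assumptions, widening the baseline reduces the depth uncertainty at a fixed range by the same factor, which is the intuition behind using wider and multiple baselines.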

Cameras that see wavelengths invisible to the human eye, such as long-wave infrared (LWIR) or short-wave infrared (SWIR), can also provide better-than-human capabilities. As with visible-spectrum cameras, their non-visible counterparts can be used in a camera array with similar depth perception benefits.

A broad, established ecosystem of camera component suppliers and integrators is relentlessly improving performance, reliability, and cost across multiple industries. Its pace of innovation is another source of continuous improvement for multi-camera systems.

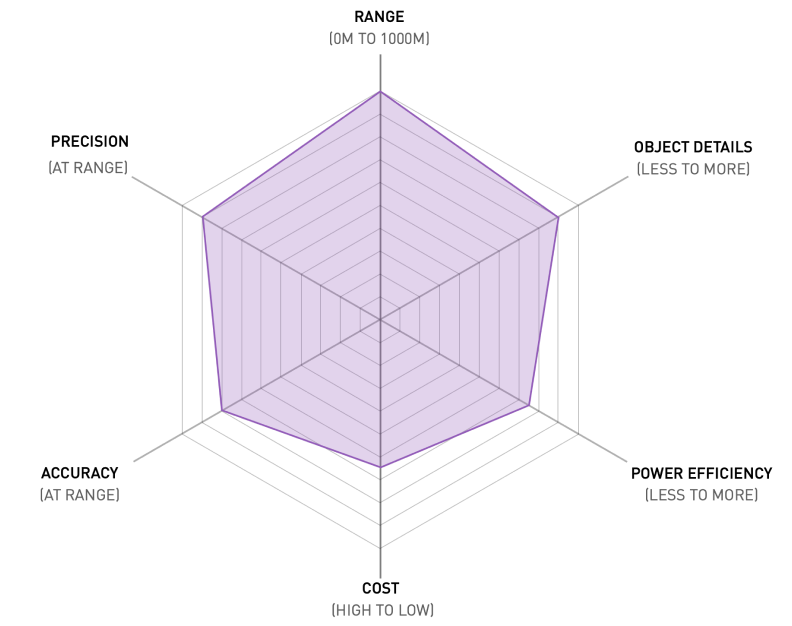

A popular technology for depth sensing in many markets comes with several shortcomings, many of which are exacerbated at long range.

Shortcomings

Although touted as an all-weather solution unaffected by conditions such as temperature and humidity, its capabilities are limited.

Shortcomings

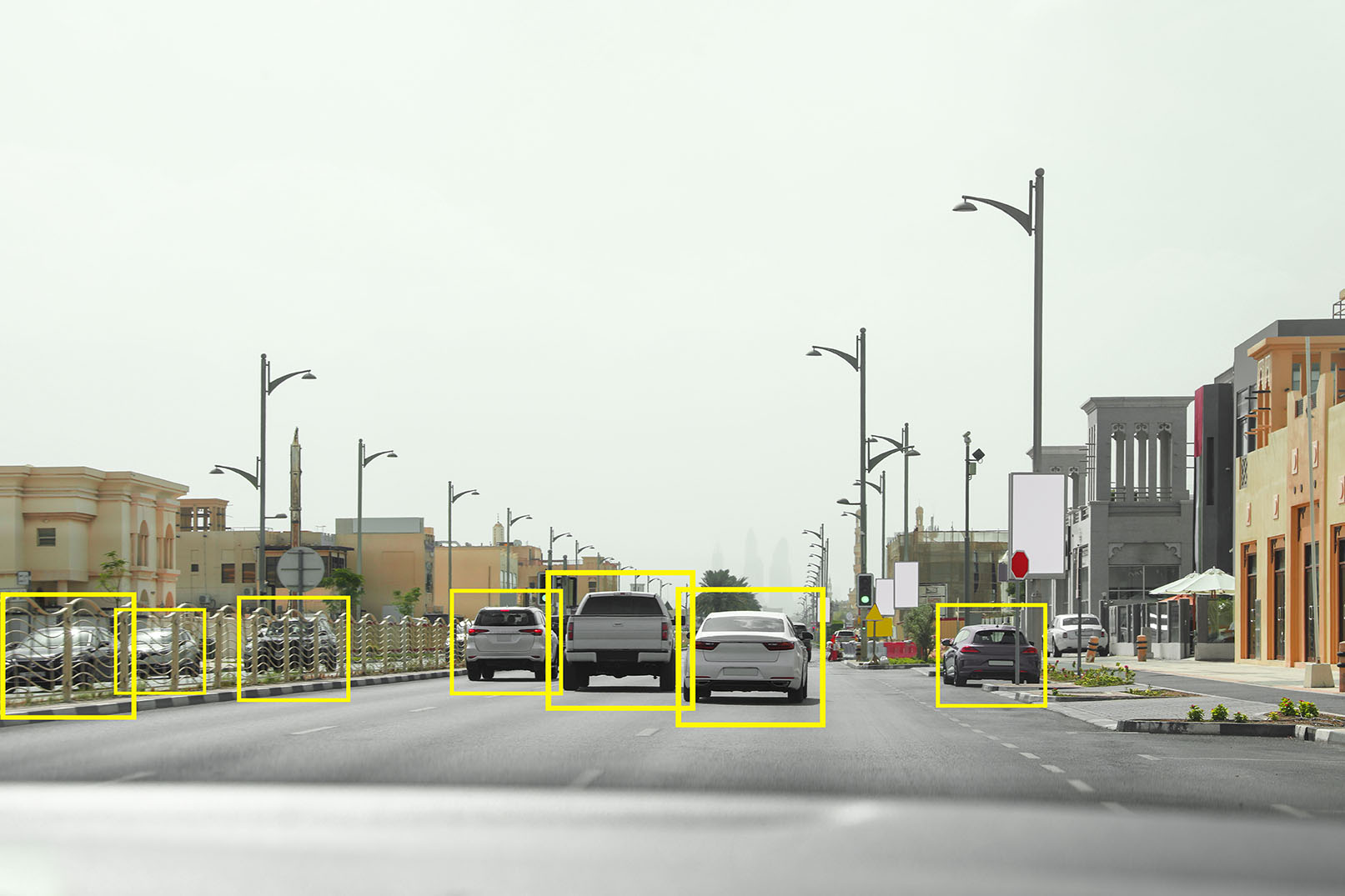

Monocular vision systems often use AI inference to segment, detect, and track objects, as well as to determine scene depth. But they are only as good as the data used to train the AI models, and they are often used in conjunction with other sensor modalities or HD maps to improve accuracy, precision, and reliability. Despite such efforts, "long tail" edge and corner cases remain unresolved for many commercial applications relying on monocular perception.

Shortcomings

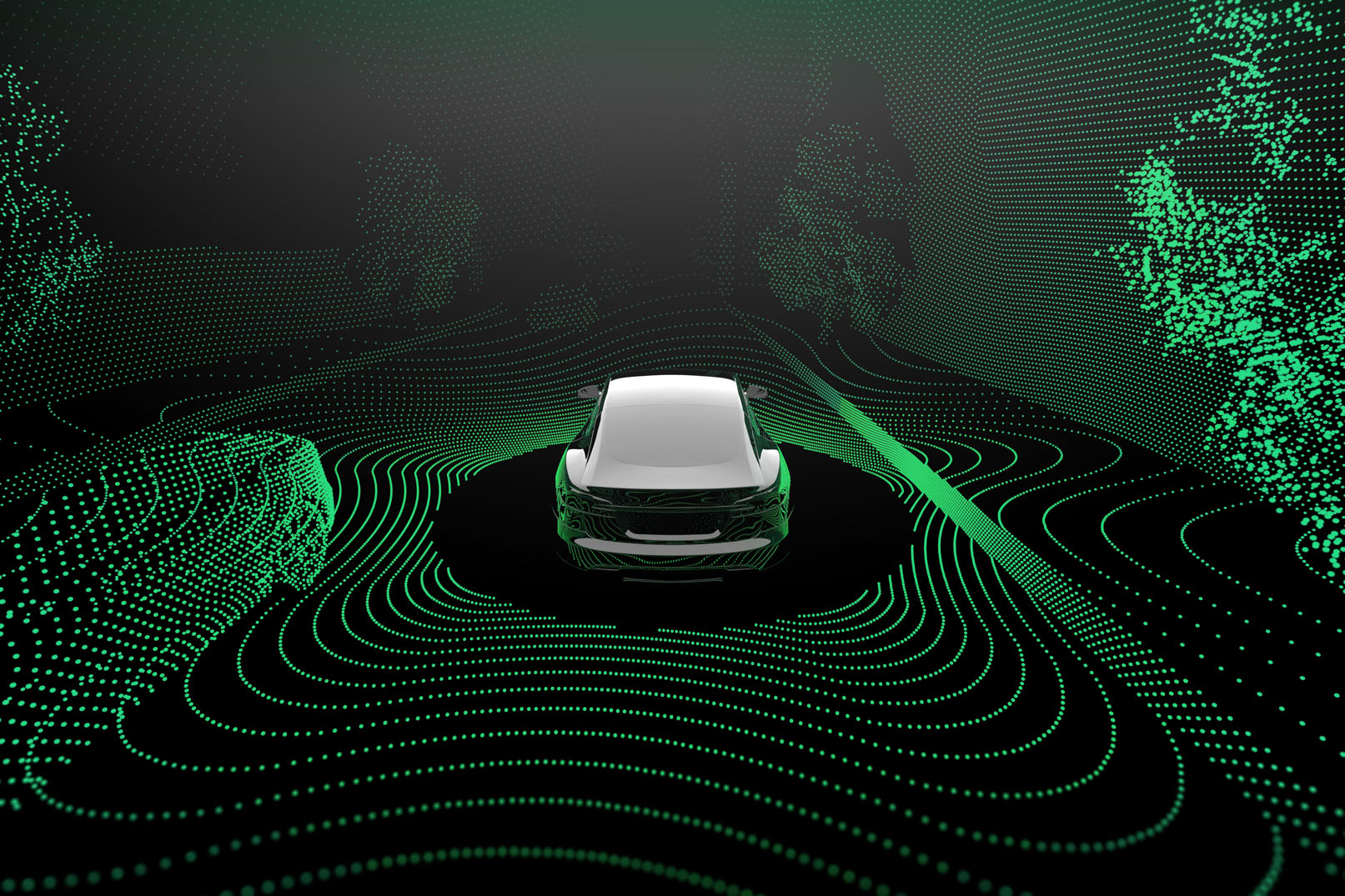

Mines, farms, and warehouses present a multitude of challenging edge cases. Measured depth is key to improving system efficacy in such dynamic environments.

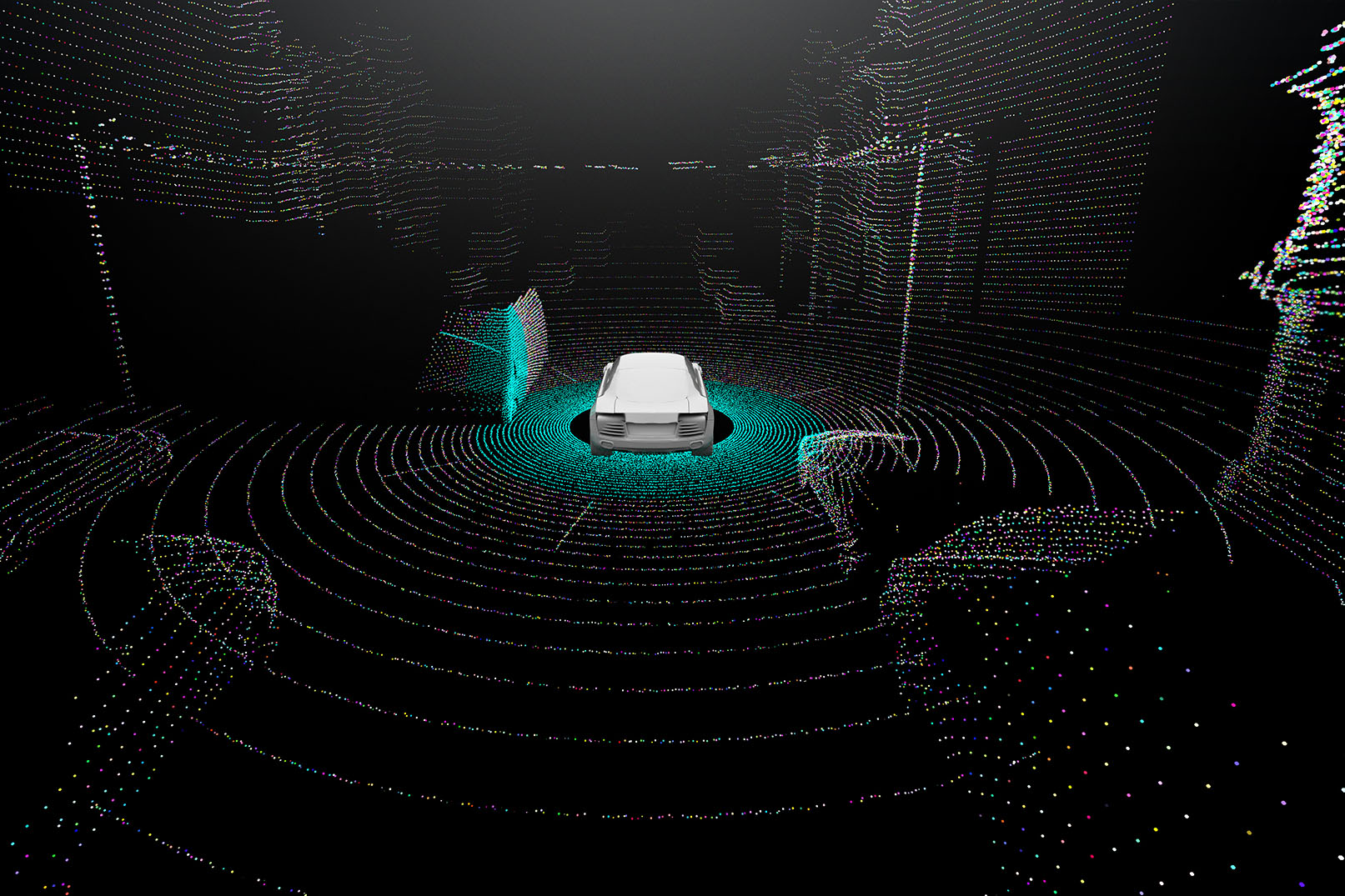

For the next generation of ADAS or self-driving cars and trucks, true scene understanding is crucial to efficiency and safety.

Urban environments are as complex as they come. Perception is fundamental to maintaining situational awareness, and object depth and velocity are key elements in such operational domains.

Any machine that needs to understand its operating environment will benefit from improved perception.